GSoC 2022

Robotics applications are typically distributed, made up of a collection of concurrent asynchronous components which communicate using some middleware (ROS messages, DDS…). Building robotics applications is a complex task. Reusing existing nodes or libraries that already solve part of the problem, and relying on mature tools, can increase software robustness and shorten development time. JdeRobot provides several tools, libraries and reusable nodes, written in C++, Python or JavaScript. They are ROS-friendly and fully compatible with ROS-Noetic, ROS2-Foxy (and Gazebo11).

Our community mainly works on four development areas:

-

Education in Robotics. RoboticsAcademy is our main project. It is a ROS-based framework to learn robotics and computer vision with drones, autonomous cars…. It is a collection of Python programmed exercises for engineering students.

-

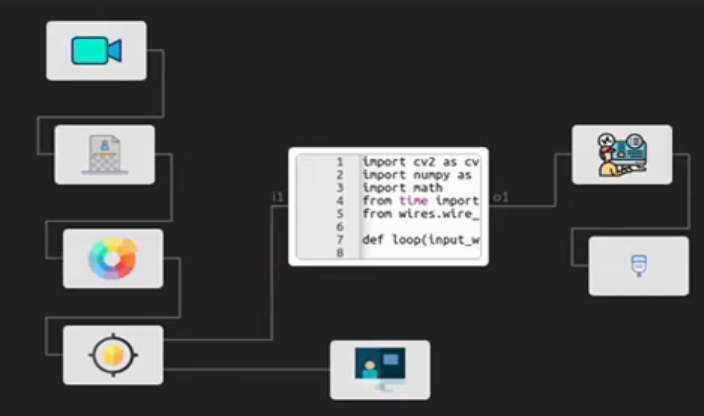

Robot Programming Tools. For instance, VisualCircuit for robot programming with connected blocks, as in eleectronic circuits, in a visual way.

-

MachineLearning in Robotics. For instance, DeepLearningStudio to explore the use neural networks for robot control. It includes the BehaviorMetrics tool for assessment of neural networks for autonomous driving. RL-Studio, a library for the training of Reinforcement Learning algorithms for robot control. DetectionMetrics tool for evaluation of visual detection neural networks and algorithms.

-

Reconfigurable Computing in Robotics. FPGA-Robotics for programming robots with reconfigurable computing (FPGAs) using open tools as IceStudio and Symbiflow. Verilog-based reusable blocks for robotics applications.

Selected contributors

In 2022, the following contributors were selected for Google Summer of Code:

Pratik Mishra

Robotics Academy: migration of several exercises from ROS1 to ROS2 and refinement

Apoorv Garg

Robotics Academy: improvement of the web templates using powerful frontend technologies

Ideas list

This open source organization welcomes contributors in these topics:

Project #1: Robotics Academy: optimization of exercise templates

Brief Explanation: Robotics-Academy is a framework for learning robotics and computer vision. It consists of a collection of robot programming exercises. The students have to code in Python the behavior of a given (either simulated or real) robot to solve some task related to robotics or computer vision. It uses standard middleware and libraries such as ROS or OpenCV.

For each exercise there is a webpage (exercise.html) and a Python template (exercise.py), both connected through websockets. Exercise.py typically connects to the robot sensors and actuators in the Gazebo simulator, connects to the webpage to show the Graphical User Interface, and runs the robot’s brain, whose code the user edits on the webpage. Currently the Python template is based on threading. Since, in CPython, the global interpreter lock (GIL) prevents threads from executing Python code in parallel, one possible optimization is to implement it with subprocesses or Python multiprocessing to take full advantage of the multiple cores of the user’s computer. Another desired optimization is to make full use of GPU acceleration on the user’s computer from within the RoboticsAcademy Docker container.
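A minimal sketch of the multiprocessing idea follows, assuming a Linux host such as the RoboticsAcademy Docker container (it explicitly requests the POSIX-only `fork` start method). The brain runs in its own process and publishes its latest command through `multiprocessing.Value`, which lives in shared memory. `brain_loop` and `run_brain` are hypothetical names, not part of the current template, and a real brain would read sensors and drive actuators instead of writing a ramp of values.

```python
import multiprocessing as mp
import time


def brain_loop(speed, stop_event, n_iterations):
    """Hypothetical stand-in for the user's brain, running in its own process."""
    for i in range(n_iterations):
        if stop_event.is_set():
            break
        with speed.get_lock():  # guard the shared value against concurrent access
            speed.value = 0.1 * (i + 1)
        time.sleep(0.001)  # placeholder for one control-loop period


def run_brain(n_iterations=10, timeout=5.0):
    # Assumption: POSIX host, where the "fork" start method is available.
    ctx = mp.get_context("fork")
    speed = ctx.Value("d", 0.0)  # a C double allocated in shared memory
    stop_event = ctx.Event()
    worker = ctx.Process(target=brain_loop, args=(speed, stop_event, n_iterations))
    worker.start()
    # The parent process stays free while the brain runs; a real template would
    # serve the GUI here. This sketch just waits until the worker ends or times out.
    deadline = time.monotonic() + timeout
    while worker.is_alive() and time.monotonic() < deadline:
        time.sleep(0.01)
    stop_event.set()  # ask the brain to stop if it is still running
    worker.join()
    return speed.value


if __name__ == "__main__":
    print(run_brain(10))
```

Calling `run_brain(10)` returns the last value written by the child process (1.0), showing that the data crossed the process boundary. Shared-memory primitives avoid pickling data on every exchange, which matters for camera frames; larger buffers could use `multiprocessing.shared_memory.SharedMemory`.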

- Skills required/preferred: Python programming skills, Python subprocesses, shared memory

- Difficulty rating: medium

- Expected results: smoother execution of RoboticsAcademy exercises

- Expected size: 175h

- Mentors: David Roldán (david.roldan AT urjc.es) and Sakshay Mahna (sakshum19 AT gmail.com)

Project #2: Robotics Academy: consolidation of drone based exercises

Brief Explanation: Robotics-Academy is a framework for learning robotics and computer vision. It consists of a collection of robot programming exercises. The students have to code in Python the behavior of a given (either simulated or real) robot to solve some task related to robotics or computer vision. It uses standard middleware and libraries such as ROS or OpenCV.

Currently there are 9 drone exercises available, all as web templates (RoboticsAcademy v3.2), and two more are about to come. The project’s goal is to consolidate the existing exercises, making the front end more appealing and extending the drone back end (PX4 and JdeRobot’s dronewrapper) with new functionalities, such as support for new control methods or new sensors (IR, depth cameras, etc.). The project may also explore the development of new drone exercises or support for real drones.

You will work with Docker, Gazebo-11 and ROS-noetic. Using ROS2 for these exercises will also be explored.

- Skills required/preferred: Python, OpenCV, ROS, HTML, CSS and Django.

- Difficulty rating: medium

- Expected results: ROS-based drone exercises.

- Expected size: 175h

- Mentors: Pedro Arias (pedroariasperez96 AT gmail.com) and Arkajyoti Basak (arkajbasak121 AT gmail.com)

Project #3: Robotics Academy: improvement of autonomous driving exercises

Brief Explanation: Robotics-Academy is a framework for learning robotics and computer vision. It consists of a collection of robot programming exercises. The students have to code in Python the behavior of a given (either simulated or real) robot to solve some task related to robotics or computer vision. It uses standard middleware and libraries such as ROS or OpenCV.

Currently there are four autonomous driving exercises in RoboticsAcademy: local navigation, global navigation, autoparking and car junction. The car models have to be made more realistic, using Ackermann steering control and an onboard LIDAR sensor. Using ROS2 for these exercises will be explored. Tentative solutions for the exercises will also be developed.
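As background for Ackermann control, here is a sketch of the standard kinematic relation between a (linear, angular) velocity command and Ackermann steering angles, based on the bicycle model. The function name, its parameters, and the choice to return zero steering when stopped are assumptions for illustration, not the RoboticsAcademy car model.

```python
import math


def ackermann_steering(linear, angular, wheelbase, track):
    """Convert a velocity command into Ackermann steering angles (radians).

    Returns (center, left, right): the equivalent bicycle-model angle and the
    angles of the left and right front wheels. Positive angles steer left.
    Assumes the turning radius is larger than half the track width.
    """
    if abs(angular) < 1e-9 or abs(linear) < 1e-9:
        # Driving straight, or stopped: a car cannot turn in place, so no steering.
        return 0.0, 0.0, 0.0
    radius = linear / angular  # signed turning radius at the rear-axle center
    center = math.atan(wheelbase / radius)
    # The inner wheel follows a tighter circle than the outer one, so it steers more.
    left = math.atan(wheelbase / (radius - track / 2))
    right = math.atan(wheelbase / (radius + track / 2))
    return center, left, right
```

For example, with a 2 m wheelbase, a 1 m track and a command of 1 m/s at 0.5 rad/s (a 2 m radius left turn), the bicycle-model angle is π/4 and the inner (left) wheel steers more than the outer one, which is exactly what a differential-drive model cannot capture.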

- Skills required/preferred: Python programming skills, Gazebo.

- Difficulty rating: medium

- Expected results: more realistic ROS-based autonomous driving exercises

- Expected size: 175h

- Mentors: Luis Roberto Morales (lr.morales.iglesias AT gmail.com), José María Cañas (josemaria.plaza AT gmail.com)

Project #4: Robotics-Academy: improve Deep Learning based Human Detection exercise

Brief Explanation: Robotics-Academy is a framework for learning robotics and computer vision. It consists of a collection of robot programming exercises. The students have to code in Python the behavior of a given (either simulated or real) robot to solve some task related to robotics or computer vision. It uses standard middleware and libraries such as ROS or OpenCV.

The idea for this project is to improve the “Human detection” Deep Learning exercise at Robotics-Academy, developed during GSoC-2021. Instead of coding the solution in a web-based editor, users have to upload a deep learning model that takes video frames as input and outputs the image coordinates of the detected humans. One goal of this project is to support neural network models in the open ONNX format, which is becoming a standard. Smooth execution of the exercise is also a goal, ideally taking advantage of the GPU on the user’s machine from within the RoboticsAcademy Docker container. An automatic evaluator to test the performance of the network provided by the user will also be explored. All the above functionalities have, to some extent, already been implemented in the exercise. The goal is to improve or refine them, either on top of the existing implementation or from scratch. In short, the scope of improvements in the exercise includes:

- Custom-train, or find, an enhanced DL model trained specifically to detect humans only. The pre-processing and post-processing steps would have to be adapted to the input and output structure of the new model.

- Enhance the model benchmarking in terms of interpretability, use case, accuracy and visual appeal to the user.

- Enable GPU support while executing the exercise from the Docker container.

- Ensure smooth exercise execution.

- Other improvements are also welcome, such as adding or modifying features to make the exercise more user-friendly and easier to use.
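The automatic evaluator mentioned above could score detections with intersection over union (IoU), a common metric for this task. The sketch below assumes boxes are `(x1, y1, x2, y2)` pixel coordinates and that predictions arrive sorted by descending confidence; `iou` and `precision_recall` are hypothetical helpers with a simple greedy matching, not the exercise’s current evaluator.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels, x1 < x2, y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predicted: Sequence[Box], ground_truth: Sequence[Box],
                     iou_threshold: float = 0.5) -> Tuple[float, float]:
    """Greedily match predicted humans to ground-truth humans.

    Each ground-truth box can be matched at most once; a prediction counts as a
    true positive if its best unmatched overlap reaches `iou_threshold`.
    """
    matched = set()
    true_positives = 0
    for p in predicted:
        best_index, best_iou = None, iou_threshold
        for i, g in enumerate(ground_truth):
            if i in matched:
                continue
            overlap = iou(p, g)
            if overlap >= best_iou:
                best_index, best_iou = i, overlap
        if best_index is not None:
            matched.add(best_index)
            true_positives += 1
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall
```

With one correct box and one spurious box against a single annotated human, `precision_recall` returns `(0.5, 1.0)`: every human was found, but half of the detections were false alarms.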

- Skills required/preferred: Python, OpenCV, PyTorch/TensorFlow

- Difficulty rating: medium

- Expected results: a web-based exercise for solving a visual detection task using deep learning

- Expected size: 175h

- Mentors: David Pascual (d.pascualhe AT gmail.com) and Shashwat Dalakoti (shash.dal623 AT gmail.com)

Project #5: Robotics-Academy: new exercise using Deep Learning for Visual Control

Brief Explanation: Robotics-Academy is a framework for learning robotics and computer vision. It consists of a collection of robot programming exercises. The students have to code in Python the behavior of a given (either simulated or real) robot to solve some task related to robotics or computer vision. It uses standard middleware and libraries such as ROS or OpenCV.

The idea for this project is to develop a new deep learning exercise for visual robotic control within the Robotics-Academy context. We will build a web-based interface that allows the user to upload a trained model that takes as input the images from a camera mounted on a drone or a car and outputs the linear speed and angular velocity of the vehicle. The controlled robot and its environment will be simulated using Gazebo. In this project, we will:

- Update the web interface to accept models trained with PyTorch/TensorFlow as input.

- Build new widgets for monitoring results for the particular exercise.

- Get a simulated environment ready.

- Code the core application that will feed the trained model with input data and send back the results.

- Train a naive model that shows how the exercise can be solved.
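The core application described in the list above can be sketched as a loop that feeds each camera frame to the model and forwards a bounded velocity command. This assumes the user’s model can be called as a function mapping one frame to a `(linear, angular)` pair; `VelocityCommand`, `control_step`, `run_controller`, the `send` callback and the speed limits are all hypothetical, and in the exercise `send` would publish the command to the simulated vehicle.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Tuple


@dataclass
class VelocityCommand:
    linear: float   # linear speed (m/s)
    angular: float  # angular velocity (rad/s)


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def control_step(model: Callable[[Any], Tuple[float, float]], frame: Any,
                 max_linear: float = 3.0, max_angular: float = 1.5) -> VelocityCommand:
    """Run the model on one frame and bound its outputs to safe limits."""
    linear, angular = model(frame)
    return VelocityCommand(clamp(float(linear), -max_linear, max_linear),
                           clamp(float(angular), -max_angular, max_angular))


def run_controller(model: Callable[[Any], Tuple[float, float]], frames: Iterable[Any],
                   send: Callable[[VelocityCommand], None]) -> None:
    """Feed every frame to the model and send back the resulting command."""
    for frame in frames:
        send(control_step(model, frame))
```

Clamping is a design choice: a naive or badly trained model may output extreme values, and bounding them keeps the simulated vehicle controllable while the user iterates on the network.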

This new exercise may reuse the infrastructure developed for the “Human detection” Deep Learning exercise. The following videos show one of our current web-based exercises and a visual control task solved using deep learning:

- Skills required/preferred: Python, OpenCV, PyTorch/TensorFlow, Gazebo

- Difficulty rating: medium

- Expected results: a web-based exercise for robotic visual control using deep learning

- Expected size: 175h

- Mentors: David Pascual (d.pascualhe AT gmail.com) and Pankhuri Vanjani (pankhurivanjani AT gmail.com)

Project #6: Robotics-Academy: improvement of the web templates using powerful frontend technologies

Brief Explanation: Robotics-Academy is a framework for learning robotics and computer vision. It consists of a collection of robot programming exercises. The students have to code in Python the behavior of a given (either simulated or real) robot to solve some task related to robotics or computer vision. It uses standard middleware and libraries such as ROS or OpenCV.

For each exercise there is a webpage (exercise.html) and a Python template (exercise.py), both connected through websockets. The webpages are the Graphical User Interface (GUI) of each RoboticsAcademy exercise, and they use several web templates. The browser connects to the application running in the Docker container in order to run the simulation, let users send their code, and show the results of the simulation. Currently, the web templates are developed using plain HTML, JavaScript and CSS, and we aim to improve them by using advanced frontend technologies such as React, Vue, Angular or Flutter.

- Skills required/preferred: Python, JavaScript, HTML, CSS

- Difficulty rating: medium

- Expected results: Upgrade the current web templates of all exercises to use a more advanced frontend technology.

- Expected size: 350h

- Mentors: David Roldán (david.roldan AT urjc.es) and Jose María Cañas (josemaria.plaza AT urjc.es)

Project #7: improvement of VisualCircuit web service

Brief Explanation: VisualCircuit allows users to program robotic intelligence using a visual language which consists of blocks and wires, as in electronic circuits. During the last year, including GSoC-2021, the Graphical User Interface of this tool was migrated to React and a web server was developed to provide its functionality as a web service. Anyone can try it out without going through the process of local installation. Only the ROS and Gazebo drivers are required locally to run the circuit developed on the VisualCircuit webpage. The workflow is: web server → download the circuit file → execute that file locally. You can read further about the tool on the website. This way, VisualCircuit becomes cross-platform, can attract new users and is very convenient to use.

We want to refine this tool by developing several robot applications with it, involving ROS2 robots and the Gazebo simulator. These should include not only purely reactive robot applications but also more complex ones involving Finite State Machines, several different robot types (drones, indoor, outdoor…), and vision-based applications. In addition, the Python implementation of the blocks and wires from the visual design will be refactored, hopefully achieving a cross-platform implementation that works on both Linux and Windows machines.
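To illustrate what a Python synthesis of blocks and wires involves, here is a minimal sketch in which each block is a function, each wire routes one block’s output into a named input of another, and a single execution pass runs blocks in dependency order (Kahn’s topological sort). The `Circuit` class and its methods are hypothetical and do not reflect VisualCircuit’s actual generated code, which also has to handle continuous, concurrent execution.

```python
from collections import defaultdict, deque
from typing import Any, Callable, Dict, List, Tuple


class Circuit:
    """Hypothetical block-and-wire model: blocks are functions, wires carry values."""

    def __init__(self) -> None:
        self.blocks: Dict[str, Callable[..., Any]] = {}
        self.wires: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add_block(self, name: str, fn: Callable[..., Any]) -> None:
        self.blocks[name] = fn

    def connect(self, src: str, dst: str, port: str) -> None:
        # Wire the output of block `src` into input `port` of block `dst`.
        self.wires[src].append((dst, port))

    def run_once(self) -> Dict[str, Any]:
        # Kahn's algorithm: a block runs only after all its input wires carry values.
        indegree = {name: 0 for name in self.blocks}
        for targets in self.wires.values():
            for dst, _ in targets:
                indegree[dst] += 1
        ready = deque(name for name, degree in indegree.items() if degree == 0)
        inputs: Dict[str, Dict[str, Any]] = defaultdict(dict)
        outputs: Dict[str, Any] = {}
        while ready:
            name = ready.popleft()
            outputs[name] = self.blocks[name](**inputs[name])
            for dst, port in self.wires[name]:
                inputs[dst][port] = outputs[name]
                indegree[dst] -= 1
                if indegree[dst] == 0:
                    ready.append(dst)
        if len(outputs) != len(self.blocks):
            raise ValueError("circuit contains a cycle")
        return outputs
```

For example, wiring a `sensor` block into a `threshold` block and that into a `motor` block yields a tiny reactive behavior, and because the sketch uses only the standard library it runs unchanged on Linux and Windows.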

- Skills required/preferred: Python, Django and some basic JavaScript knowledge

- Difficulty rating: medium

- Expected results: better Python synthesis and a set of robot applications developed with VisualCircuit

- Expected size: 175h

- Mentors: Muhammad Taha (mtsg09 AT gmail.com) and Suhas Gopal (suhas.g96.sg AT gmail.com)

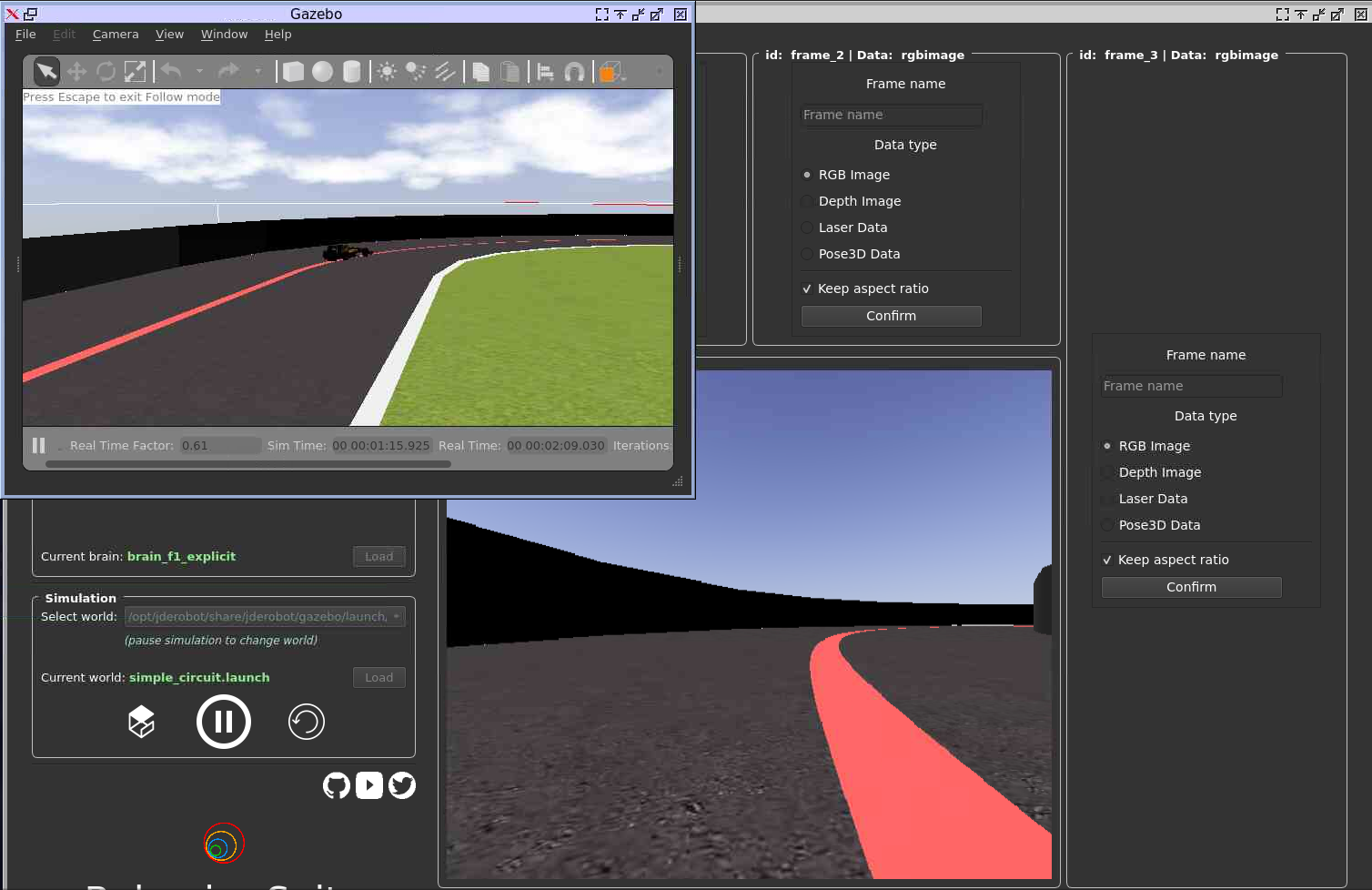

Project #8: optimization of Deep Learning models for autonomous driving

Brief explanation: BehaviorMetrics is a tool used to compare different autonomous driving architectures. It uses Gazebo, a well-known and powerful robotics simulator, and ROS Noetic. We are currently able to drive a car autonomously on different circuits, using deep learning models for robot control. Check out the videos below to get an insight.

For this project, we would like to improve the current model stack based on deep learning, applying optimization techniques to make the models run faster without losing precision. Since the hardware used for inference in real-world scenarios usually has limited compute capabilities, we would like to apply speed-up techniques to our models or explore new model architectures with low inference time. Some references:

- Skills required/preferred: Python and deep learning knowledge (TensorFlow/PyTorch).

- Difficulty rating: medium

- Expected results: new autonomous driving deep learning models optimized for lower inference time.

- Expected size: 175h

- Mentors: Sergio Paniego Blanco (sergiopaniegoblanco AT gmail.com) and Utkarsh Mishra (utkarsh75477 AT gmail.com)
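Quantization is one widely used speed-up technique of the kind Project #8 refers to (pruning and distillation are others). The plain-Python sketch below only shows the idea behind symmetric per-tensor int8 post-training quantization; the function names are hypothetical, and in practice one would use the quantization tooling of TensorFlow or PyTorch. Because it introduces a bounded rounding error, driving performance would have to be re-checked in BehaviorMetrics afterwards.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Map float weights to int8 values sharing one scale (symmetric, per tensor)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # round() maps each weight to the nearest integer step; clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from their int8 representation."""
    return [q * scale for q in quantized]
```

Each weight is stored in one byte instead of four, and the reconstruction error per weight is at most half a quantization step (`scale / 2`), which is why accuracy often degrades only slightly while integer arithmetic speeds up inference on constrained hardware.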

Project #9: DeepLearning models for autonomous drone piloting and support in BehaviorMetrics

Brief Explanation: BehaviorMetrics is a tool used to compare different autonomous driving architectures. It uses Gazebo, a well-known and powerful robotics simulator, and ROS Noetic. During a GSoC 2021 project, we explored adding drone support to BehaviorMetrics. We were able to add this support for the IRIS drone, so we would now like to explore autonomous drone piloting techniques based on deep learning for this configuration.

We would like to generate new drone scenarios especially suited for the robot control problem, train deep learning models based on state-of-the-art architectures, and refine this support in the BehaviorMetrics stack. 3D drone control is a step beyond 2D car control, as there is an additional degree of freedom and the orientation of the onboard camera typically oscillates significantly while the vehicle moves in 3D.

- Skills required/preferred: Python and deep learning knowledge (especially PyTorch).

- Difficulty rating: hard

- Expected results: broader support for autonomous drone piloting using Gazebo and PyTorch.

- Expected size: 350h

- Mentors: Sergio Paniego Blanco (sergiopaniegoblanco AT gmail.com) and Utkarsh Mishra (utkarsh75477 AT gmail.com)

Project #10: Robotics Academy: migration of several exercises from ROS1 to ROS2 and refinement

Brief Explanation: Currently most RoboticsAcademy exercises are based on ROS1 Noetic and Gazebo 11. There are also several prototypes of ROS2 Foxy based exercises which require refinement. The main goal of this project is to migrate several exercises from RADI-3 (ROS1-based) to RADI-4 (ROS2-based), updating the models of the robots involved in those exercises to their ROS2 counterparts. This will require understanding the complete infrastructure and modifying the exercises to use ROS2 communications. In addition, support for several ROS tools (such as rqt_graph and RViz) should be added to the corresponding exercise webpages, mainly using VNC. New exercises integrating the ROS2 Navigation stack, involving functionalities such as collision avoidance, global path planning and multi-robot coordination, are also welcome.

ROS2 introduces several improvements over ROS1, with changes to the middleware and the software architecture in many aspects. In this project, we will focus on developing new exercises with ROS2. For more information on ROS2-based exercises, have a look at the GSoC 2021 project and the corresponding Academy exercises 1 and 2. In addition to porting exercises, contributors are also welcome to suggest improvements to the current RADI framework.

- Skills required/preferred: C++, Python programming skills, experience with ROS. Good to know: ROS2

- Difficulty rating: hard

- Expected results: Migrating the current web template exercises from (ROS1 based) RADI-3 to (ROS2 based) RADI-4

- Expected size: 350h

- Mentors: Siddharth Saha (sahasiddharth611 AT gmail.com) and Shreyas Gokhale (shreyas6gokhale AT gmail.com).

Application instructions for GSoC-2022

We encourage students to contact the relevant mentors before submitting their application on the official GSoC website. If in doubt about which project(s) to contact, send a message to jderobot AT gmail.com. We recommend browsing previous GSoC student pages to look for ready-to-use projects and to get an idea of the expected amount of work for a valid GSoC proposal.

Requirements

- Git experience

- C++ and Python programming experience (depending on the project)

Programming tests

| Project | #1 | #2 | #3 | #4 | #5 | #6 | #7 | #8 | #9 | #10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Academy (A) | X | X | X | X | X | X | X | X | X | X |
| C++ (B) | O | O | O | O | O | O | O | O | O | X |
| Python (C) | X | X | X | X | X | X | X | X | X | X |
| ROS2 (D) | O | O | O | O | O | - | - | - | - | X |

Where:

| Symbol | Meaning |
|---|---|
| X | Mandatory |
| O | Optional |
| - | Not applicable |

Before any proposal is accepted, all candidates have to complete these programming challenges (the table above shows which ones are mandatory for each project):

- (A) RoboticsAcademy challenge

- (B) C++ challenge

- (C) Python challenge

- (D) ROS2 challenge

Send us your information

AFTER doing the programming tests, fill in this web form with your information and challenge results. Then you are invited to ask the project mentors about the project details. We may require more information from you, such as:

- Contact details

  - Name and surname:

  - Country:

  - Email:

  - Public repository/ies:

  - Personal blog (optional):

  - Twitter/Identica/LinkedIn/others:

- Timeline

  - Now split your project idea into smaller tasks. Quantify the time you think each task needs. Finally, draw a tentative project plan (timeline) including dates covering the whole GSoC period. Don’t forget to also include the days on which you don’t plan to code, because of exams, holidays, etc.

  - Do you understand this is a serious commitment, equivalent to a full-time paid summer internship or summer job?

  - Do you have any known time conflicts during the official coding period?

- Studies

  - What is your school and degree?

  - Would your application contribute to your ongoing studies/degree? If so, how?

- Programming background

  - Computing experience: operating systems you use on a daily basis, known programming languages, hardware, etc.

  - Robot or Computer Vision programming experience:

  - Other software programming:

- GSoC participation

  - Have you participated in GSoC before?

  - How many times, which year, which project?

  - Have you applied but not been selected? When?

  - Have you submitted/will you submit another proposal for GSoC 2022 to a different org?

Previous GSoC students

- Suhas Gopal (GSoC-2021) Shifting VisualCircuit to a web server

- Utkarsh Mishra (GSoC-2021) Autonomous Driving drone with Gazebo using Deep Learning techniques

- Siddharth Saha (GSoC-2021) Robotics Academy: multirobot version of the Amazon warehouse exercise in ROS2

- Shashwat Dalakoti (GSoC-2021) Robotics-Academy: exercise using Deep Learning for Visual Detection

- Arkajyoti Basak (GSoC-2021) Robotics Academy: new drone based exercises

- Chandan Kumar (GSoC-2021) Robotics Academy: Migrating industrial robot manipulation exercises to web server

- Muhammad Taha (GSoC-2020) VisualCircuit tool, digital electronics language for robot behaviors.

- Sakshay Mahna (GSoC-2020) Robotics-Academy exercises on Evolutionary Robotics.

- Shreyas Gokhale (GSoC-2020) Multi-Robot exercises for Robotics Academy In ROS2.

- Yijia Wu (GSoC-2020) Vision-based Industrial Robot Manipulation with MoveIt.

- Diego Charrez (GSoC-2020) Reinforcement Learning for Autonomous Driving with Gazebo and OpenAI gym.

- Nikhil Khedekar (GSoC-2019) Migration to ROS of drones exercises on JdeRobot Academy

- Shyngyskhan Abilkassov (GSoC-2019) Amazon warehouse exercise on JdeRobot Academy

- Jeevan Kumar (GSoC-2019) Improving DetectionSuite DeepLearning tool

- Baidyanath Kundu (GSoC-2019) A parameterized automata Library for VisualStates tool

- Srinivasan Vijayraghavan (GSoC-2019) Running Python code on the web browser

- Pankhuri Vanjani (GSoC-2019) Migration of JdeRobot tools to ROS 2

- Pushkal Katara (GSoC-2018) VisualStates tool

- Arsalan Akhter (GSoC-2018) Robotics-Academy

- Hanqing Xie (GSoC-2018) Robotics-Academy

- Sergio Paniego (GSoC-2018) PyOnArduino tool

- Jianxiong Cai (GSoC-2018) Creating realistic 3D map from online SLAM result

- Vinay Sharma (GSoC-2018) DeepLearning, DetectionSuite tool

- Nigel Fernandez (GSoC-2017)

- Okan Asik (GSoC-2017) VisualStates tool

- S.Mehdi Mohaimanian (GSoC-2017)

- Raúl Pérula (GSoC-2017) Scratch2JdeRobot tool

- Lihang Li (GSoC-2015) Visual SLAM, RGBD, 3D Reconstruction

- Andrei Militaru (GSoC-2015) Interoperation of ROS and JdeRobot

- Satyaki Chakraborty (GSoC-2015) Interconnection with Android Wear

How to increase your chances of being selected in GSoC-2022

If you put yourself in the shoes of the mentor who has to select the student, you’ll immediately realize that some behaviors are usually rewarded. Here are some examples.

- Be proactive: Mentors are more likely to select students who openly discuss the existing ideas and/or propose their own. It is a bad idea to just submit your proposal on the Google website without discussing it first, because it will likely go unnoticed.

- Demonstrate your skills: Consider that mentors are contacted by several students applying for the same project. One way to show that you are the best candidate is to demonstrate that you are familiar with the software and that you can code. How? Browse the bug tracker (GitHub issues of the JdeRobot project), fix some bugs and propose your patch by submitting a pull request, and/or ask mentors to challenge you! Moreover, bug fixes are a great way to get familiar with the code.

- Demonstrate your intention to stay: Students who are likely to disappear after GSoC are less likely to be selected, because there is no point in developing something that won’t be maintained. Moreover, one goal of GSoC is to bring new developers to the community.

RTFM

Read the relevant information about GSoC in the wiki / web pages before asking. Most FAQs have been answered already!

- Full documentation about GSoC on official website.

- FAQ from GSoC web site.

- If you are new to JdeRobot, take the time to familiarize yourself with JdeRobot.