GSoC 2018

JdeRobot is a software suite for developing robotics and computer vision applications. These domains include sensors (for instance, cameras), actuators, and intelligent software in between. It is mostly written in C++, Python and JavaScript, and provides a distributed component-based programming environment in which an application is a collection of concurrent, asynchronous components. The components may run on different computers and are connected using ICE communication middleware or ROS messages. Components interoperate through explicit interfaces. Each one may have its own independent Graphical User Interface or none at all. JdeRobot is ROS-friendly and fully compatible with ROS Kinetic.

Selected students

The students selected for Google Summer of Code 2018 were:

Vinay Sarma

Improving DetectionSuite tool including segmentation and classification tools

Ideas List

JdeRobot simplifies access to hardware devices from the control program. Getting sensor measurements is as simple as calling a local function, and ordering motor commands is as easy as calling another local function. The platform maps those calls to remote invocations on the components connected to the sensor or actuator devices. These components can be connected to real or simulated sensors and actuators, either locally or remotely over the network. Those functions form the API of the Hardware Abstraction Layer. The robotic application uses it to get the sensor readings and order the actuator commands that unfold its behavior. Several driver components have been developed to support different physical sensors, actuators and simulators. The drivers are components that can be installed at will, depending on your configuration, and they are included in the official release.

This open source project needs further development in these topics:

Project #1: JdeRobot-Academy: fleet of robots for Amazon logistics store

Brief Explanation: JdeRobot-Academy is a framework for teaching robotics and computer vision, built on JdeRobot. It is composed of a collection of cool exercises in Python about robot programming and computer vision. One nice exercise to be included is the navigation of a fleet of robots, including their path planning and coordination. The scenario is an Amazon warehouse, where the fleet of Kiva robots should autonomously move the goods from the providers' input to the storage location and from there to the output bay.

The work includes developing the robot model in Gazebo, the Python template node (with its GUI) that will host the student code, and a tentative solution.

- Expected results: a new robotics exercise in Gazebo.

- Knowledge Prerequisite: Python programming skills.

- Mentors: Alberto Martín (alberto.martinf AT urjc.es).

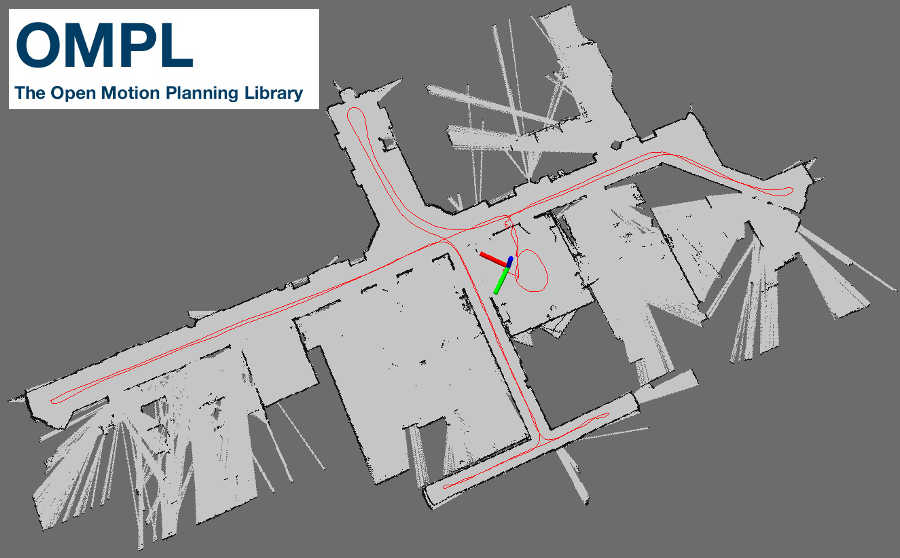

Project #2: JdeRobot-Academy: robot navigation using Open Motion Planning Library

Brief Explanation: JdeRobot-Academy is an academic framework for teaching robotics and computer vision. It includes several exercises, each consisting of a Python application that connects to the real or simulated robot and provides a template that students fill in with their code for the robot algorithms.

The main idea of this project is to introduce OMPL (Open Motion Planning Library) into JdeRobot-Academy in a new robot navigation exercise. For this task, the student will develop a new exercise and its solutions using different path planning algorithms for an autonomous wheeled robot or drone that moves through a known scenario in Gazebo.

- Expected results: a new robotics exercise in Gazebo.

- Knowledge Prerequisite: Python programming skills.

- Mentors: Alberto Martín (alberto.martinf AT urjc.es).

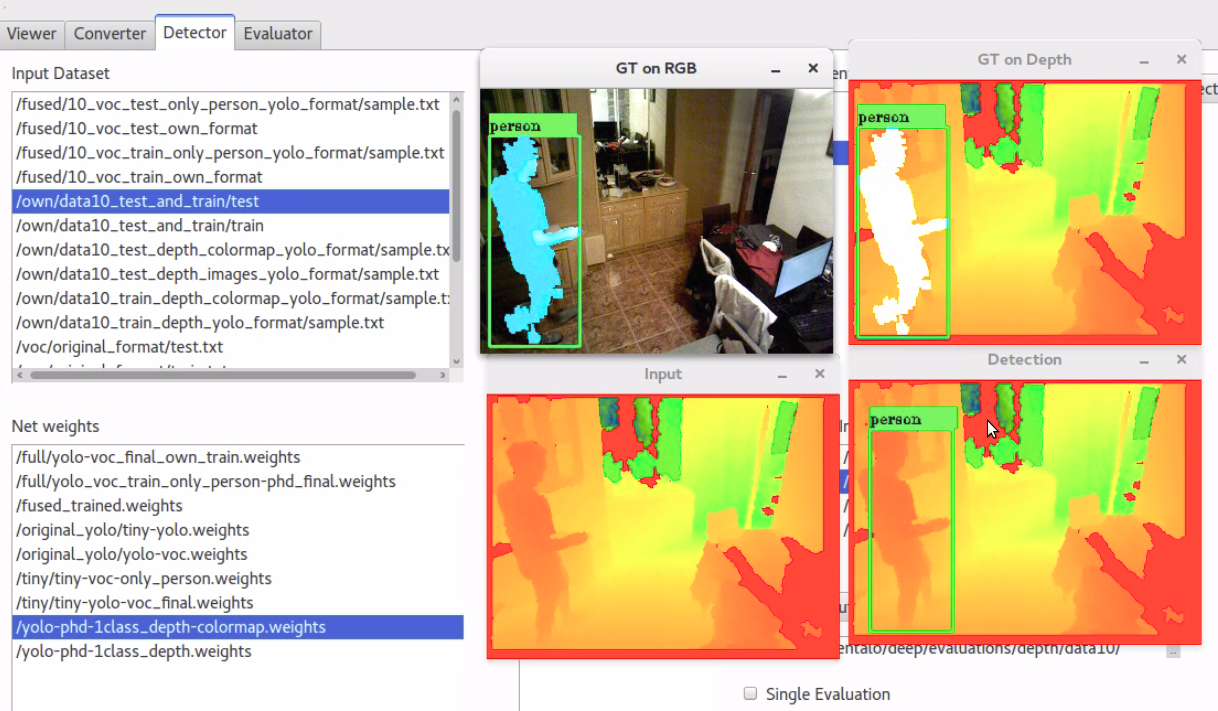

Project #3: Improving DetectionSuite deep learning tool including segmentation and classification tools

Brief Explanation: DetectionSuite is a tool under development for testing and training different deep learning architectures for object detection on images. It accepts several well-known international datasets such as PASCAL VOC and allows several deep learning architectures to be compared over exactly the same test data. It computes several objective statistics and measures their performance. Currently it supports YOLO architectures on the Darknet framework. The goal of this project is to expand the supported datasets (ImageNet, COCO…) and the supported neural frameworks (Keras, TensorFlow, Caffe…).

In addition, several detection architectures should be trained and compared with the new release of the tool.

- Expected results: a new release of the DetectionSuite tool that extends the existing functionality for object detection and also covers two new deep learning problems, classification and segmentation, including new statistics for each of them.

- Knowledge Prerequisite: C++ and Python programming skills.

- Mentors: Francisco Rivas (franciscomiguel.rivas AT urjc.es).

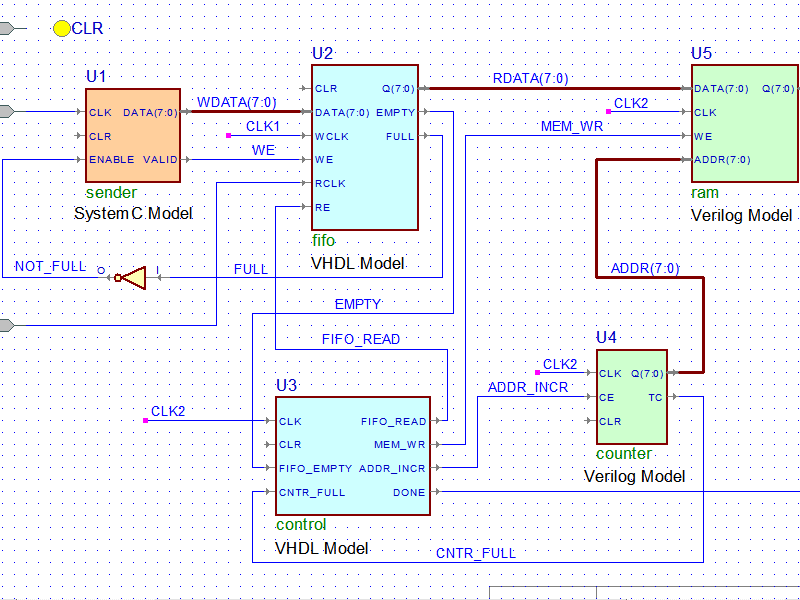

Project #4: Improving VisualStates tool, full compatibility with ROS

Brief Explanation: VisualStates is a tool for programming robot behaviors using automata. It combines a graphical language to specify the states and the transitions with a text language (Python or C++). It generates a ROS node which implements the automata and, for debugging, shows a GUI at runtime that highlights the active state at all times. Take a look at some example videos. VisualStates currently supports only subscribing and publishing to topics. We aim to integrate all the communication features of ROS, as well as basic packages that would be useful for behavior development. Within the scope of this project the following improvements are targeted:

- Integration of ROS services: behaviors will be able to call ROS services.

- Integration of ROS actionlib: behaviors will be able to call actionlib action servers.

- Reading and generating smach behaviors in VisualStates, so that existing behaviors can be modified and new ones generated.

- Expected results: a new release of VisualStates tool.

- Knowledge Prerequisite: Python programming skills.

- Mentors: Okan Aşık (asik.okan AT gmail.com).

Project #5: Improving VisualStates tool, library of parameterized automata

Brief Explanation: VisualStates is a tool for programming robot behaviors using automata. It combines a graphical language to specify the states and the transitions with a text language (Python or C++). It generates a ROS node which implements the automata and, for debugging, shows a GUI at runtime that highlights the active state at all times. Take a look at some example videos.

Every automaton created using VisualStates can be seen as a state itself and then be integrated into a larger automaton. Therefore, the user would be able to add previously created behaviors as states. When importing those behaviors, the user would have two options: copying the behavior into the new behavior, or keeping a reference to the imported automaton so that if it is changed, those changes are reflected in the new behavior too. The idea of this project is to build and support an automata library. There will be a library of predefined behaviors (automata) for coping with usual tasks, so the user can just integrate them as new states in a new automaton, without writing any code. In addition, each automaton may accept parameters to fine-tune its behavior. For example, to move a drone forward there will be a state ‘moveForward’, so the user only has to import that state and pass the desired speed as a parameter.
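The difference between importing a library behavior by copy and by reference can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the `Automaton` class, the `move_forward` factory and the action strings are hypothetical names invented here, not part of VisualStates, and an automaton is reduced to an ordered list of steps instead of a full state machine with transitions.

```python
import copy


class Automaton:
    """Hypothetical behavior: an ordered list of steps.

    Each step is either an action name (str) or a nested Automaton,
    which is how a previously created behavior is reused as a state.
    """

    def __init__(self, name, steps=None, **params):
        self.name = name
        self.steps = list(steps or [])
        self.params = params  # parameters that fine-tune the behavior

    def flatten(self):
        """Return the actions this automaton runs, expanding nested automata."""
        actions = []
        for step in self.steps:
            if isinstance(step, Automaton):
                actions.extend(step.flatten())
            else:
                actions.append(step)
        return actions


def move_forward(speed):
    """Hypothetical library entry: a parameterized 'moveForward' behavior."""
    return Automaton("moveForward", [f"set_speed({speed})"], speed=speed)


library_item = move_forward(speed=0.5)

# Option 1: import by copy. Later library edits do not affect this behavior.
by_copy = Automaton("patrol_copy", [copy.deepcopy(library_item), "turn"])

# Option 2: import by reference. Later library edits are reflected here.
by_reference = Automaton("patrol_ref", [library_item, "turn"])

# The library behavior is edited after both imports.
library_item.steps.append("hover")

print(by_copy.flatten())       # ['set_speed(0.5)', 'turn']
print(by_reference.flatten())  # ['set_speed(0.5)', 'hover', 'turn']
```

A real library would also have to decide how parameters and references are stored when a behavior is saved to disk, which this sketch leaves out.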

- Expected results: a new release of VisualStates tool.

- Knowledge Prerequisite: Python and C++ programming skills.

- Mentors: Okan Aşık (asik.okan AT gmail.com).

Project #6: Improving SLAM-testbed tool

Brief Explanation: Simultaneous Localization and Mapping (SLAM) algorithms play a fundamental role in emerging technologies, such as autonomous cars or augmented reality, providing accurate localization inside unknown environments. There are many approaches available with different characteristics in terms of accuracy, efficiency and robustness (ORB-SLAM, DSO, SVO, etc.), but their results depend on the environment and the resources available.

SLAM-testbed is a graphical tool for objectively comparing different Visual SLAM approaches. It evaluates them using several public benchmarks and statistical treatment, in order to compare them in terms of accuracy and efficiency. The main goal of this project is to increase the compatibility of this tool with new benchmarks and SLAM algorithms, so that it becomes a standard tool for evaluating future approaches.

ORB-SLAM is one of the SLAM algorithms that will be evaluated with this tool.

- Expected results: Add new benchmarks and SLAM algorithms to SLAM-testbed tool.

- Knowledge Prerequisite: C++ programming skills.

- Mentors: Eduardo Perdices (eperdices AT gsyc.es).

Project #7: Create realistic 3D maps from SLAM algorithms

Brief Explanation: Simultaneous Localization and Mapping (SLAM) algorithms play a fundamental role in emerging technologies, such as autonomous cars or augmented reality. These algorithms provide accurate localization inside unknown environments; however, the maps obtained with these techniques are often sparse and meaningless, composed of thousands of 3D points without any relation between them.

The goal of this project is to process the data obtained from SLAM approaches and create a realistic 3D map. The input data will consist of a dense 3D point cloud and a set of frames located in the map.

DSO is one of the SLAM algorithms whose output data will be used to create the 3D map.

- Expected results: A realistic 3D map from a 3D point cloud and frames.

- Knowledge Prerequisite: Python or C++ programming skills.

- Mentors: Eduardo Perdices (eperdices AT gsyc.es).

Project #8: JdeRobot-Kids: compiling Python to Arduino

Brief Explanation: JdeRobot-Kids is an academic framework for teaching robotics to children in a practical way. It is based on Python: the kids have to program typical robot behaviors, like line following, in Python. JdeRobot-Kids is now mostly centered on the mBot robot from Makeblock, both the real robot and the simulated one in Gazebo. mBot is an Arduino-based robot. Currently the student application runs on a regular computer, which is connected to the onboard Arduino. The Arduino and the PC interact using the Firmata protocol. This approach requires a continuous connection between the robot and the off-board computer. The Arduino has limited computing power, not enough to run a Python interpreter. The goal of this project is to “compile” the Python application for the Arduino microprocessor. This way the kid's program can be fully downloaded onto the mBot robot and run completely autonomously. Another possibility is to translate the Python application to C/C++, as gcc/g++ already compiles it for the Arduino microprocessor. Some ideas to explore are the LLVM compiler infrastructure, Cython…

- Expected results: Python application running on an Arduino microprocessor.

- Knowledge Prerequisite: Python, compilers.

- Mentors: José María Cañas (josemaria.plaza AT urjc.es) and Luis Roberto Morales (lr.morales.iglesias AT gmail.com).

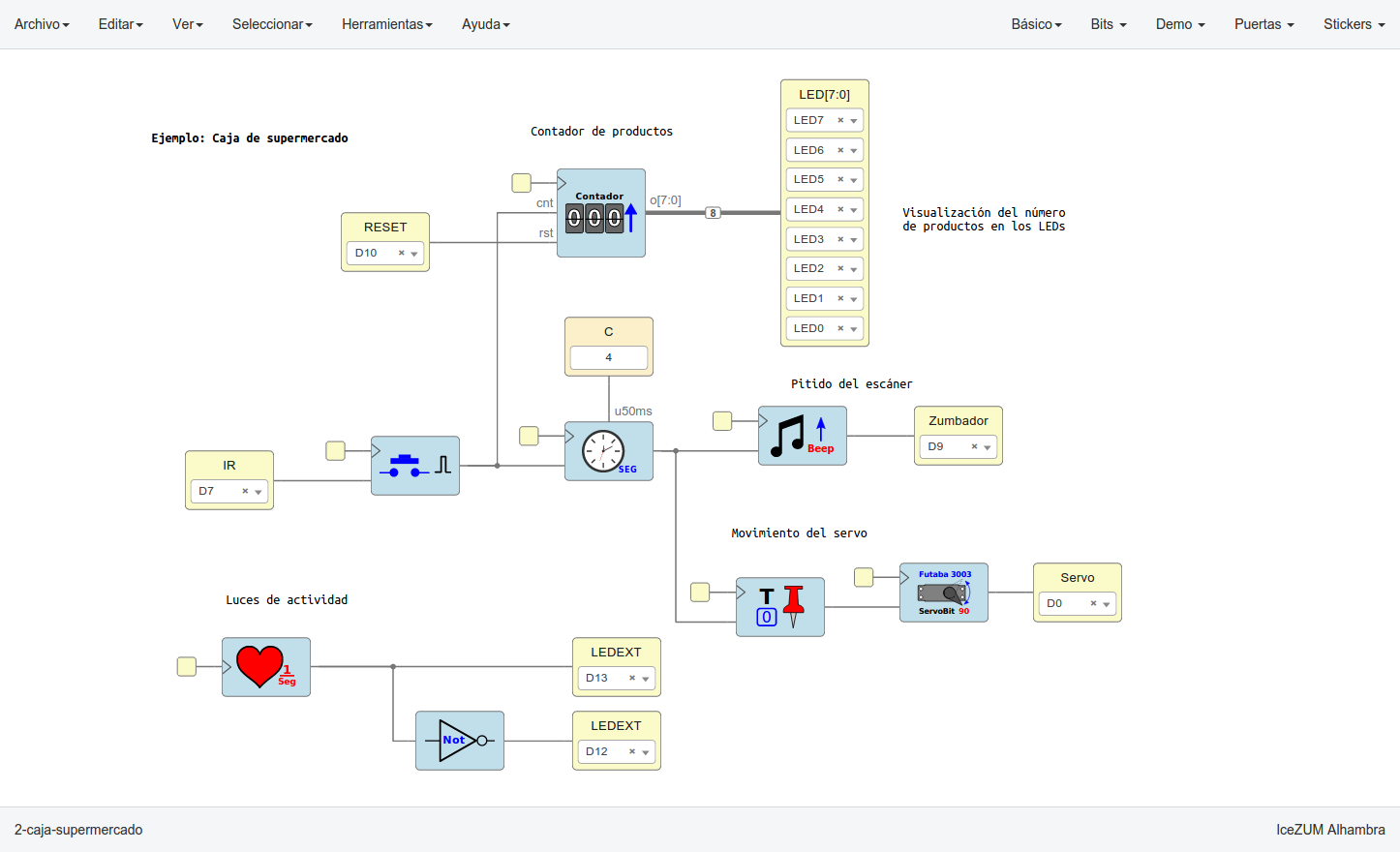

Project #9: VisualCircuit tool, digital electronics language for robot behaviors

Brief Explanation: In reconfigurable circuits (FPGAs) a hardware description language is used to visually specify the circuit configuration and its behavior. For instance, the open source IceStudio tool uses such a visual language to configure FPGAs. The idea of this project is to explore the use of the same visual language to program reactive robot behaviors. There are blocks (existing circuits) and wires to connect their inputs and outputs. Instead of synthesizing the visual program into an FPGA implementation, the goal is to synthesize it into a Python program. Each block is translated into a thread that runs a transforming function in fast iterations. Each iteration reads the block inputs, does some specific processing to compute the right values and writes them to its outputs. Each wire is translated into a shared variable that the blocks can write to or read from.
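A minimal sketch of what such a synthesized Python program might look like is given below. It is only one possible reading of the idea: the `Wire` and `Block` classes, the `run_demo` function and the 1 ms iteration period are assumptions made for illustration and do not describe an existing tool.

```python
import threading
import time


class Wire:
    """Hypothetical wire: a shared variable protected by a lock."""

    def __init__(self, initial=0):
        self._value = initial
        self._lock = threading.Lock()

    def write(self, value):
        with self._lock:
            self._value = value

    def read(self):
        with self._lock:
            return self._value


class Block(threading.Thread):
    """Hypothetical block: a thread that iterates a transforming function."""

    def __init__(self, func, inputs, output, period=0.001):
        super().__init__(daemon=True)
        self.func = func        # transforming function of the block
        self.inputs = inputs    # list of input wires
        self.output = output    # single output wire
        self.period = period    # assumed iteration period, in seconds
        self._halt = threading.Event()

    def run(self):
        # Each iteration reads the inputs, computes and writes the output.
        while not self._halt.is_set():
            values = [wire.read() for wire in self.inputs]
            self.output.write(self.func(*values))
            time.sleep(self.period)

    def stop(self):
        self._halt.set()
        self.join()


def run_demo():
    """Wire an adder block into a doubler block and return the settled output."""
    a, b, total, doubled = Wire(2), Wire(3), Wire(), Wire()
    blocks = [
        Block(lambda x, y: x + y, [a, b], total),
        Block(lambda x: 2 * x, [total], doubled),
    ]
    for block in blocks:
        block.start()
    # Wait (up to 2 s) for the value to propagate through both blocks.
    deadline = time.monotonic() + 2.0
    while doubled.read() != 10 and time.monotonic() < deadline:
        time.sleep(0.005)
    for block in blocks:
        block.stop()
    return doubled.read()


if __name__ == "__main__":
    print(run_demo())
```

Because every block runs in its own thread, the output settles after a few iterations instead of being computed in a single pass, which is close to how signals propagate through a circuit.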

- Expected results: a new tool to program reactive robot behaviors using a visual language based on blocks and wires.

- Knowledge Prerequisite: Python programming skills.

- Mentors: José María Cañas (josemaria.plaza AT urjc.es), Samuel Rey (samuel.rey.escudero AT gmail.com).

Application instructions for GSoC-2018

We encourage students to contact the relevant mentors before submitting their application on the official GSoC website. If in doubt about which project mentor(s) to contact, send an email to jmplaza AT gsyc.es, franciscomiguel.rivas AT urjc.es and almartinflorido AT gmail.com. We recommend browsing previous GSoC student pages to look for ready-to-use projects, and to get an idea of the expected amount of work for a valid GSoC proposal.

Requirements

- Git experience

- C++ and Python programming experience (depending on the project)

Programming tests

| Project | #1 | #2 | #3 | #4 | #5 | #6 | #7 | #8 | #9 |
|---|---|---|---|---|---|---|---|---|---|
| JdeRobot (A) | X | X | X | X | X | X | X | X | X |
| C++ (B) | * | * | * | X | * | X | O | O | X |
| Python (C) | X | X | X | X | X | * | O | O | X |

Legend

| Icon | Description |
|---|---|
| * | Not applicable |
| X | Mandatory |
| O | Optional: you must choose one of them |

Before any proposal is accepted, all candidates have to complete these programming challenges:

- (A) JdeRobot installation challenge.

- (B) C++ challenge.

- (C) Python challenge.

Send us your information

After doing the programming tests, fill in this web form with your information and challenge results. You are then invited to ask the project mentors about the project details. We may require more information from you, such as:

- Contact details

  - Name and surname:

  - Country:

  - Email:

  - Public repository/ies:

  - Personal blog (optional):

  - Twitter/Identica/LinkedIn/others:

- Timeline

  - Now split your project idea into smaller tasks. Quantify the time you think each task needs. Finally, draw a tentative project plan (timeline) including dates that cover the whole GSoC period. Don’t forget to include the days on which you don’t plan to code because of exams, holidays, etc.

  - Do you understand this is a serious commitment, equivalent to a full-time paid summer internship or summer job?

  - Do you have any known time conflicts during the official coding period?

- Studies

  - What is your school and degree?

  - Would your application contribute to your ongoing studies/degree? If so, how?

- Programming background

  - Computing experience: operating systems you use on a daily basis, known programming languages, hardware, etc.

  - Robot or Computer Vision programming experience:

  - Other software programming:

- GSoC participation

  - Have you participated in GSoC before?

  - How many times, which year, which project?

  - Have you applied but were not selected? When?

  - Have you submitted/will you submit another proposal for GSoC 2018 to a different org?

Previous GSoC students

- Nigel Fernandez GSoC-2017

- Okan Asik GSoC-2017, VisualStates tool

- S.Mehdi Mohaimanian GSoC-2017

- Raúl Pérula GSoC-2017, Scratch2JdeRobot tool

- Lihang Li: GSoC-2015, Visual SLAM, RGBD, 3D Reconstruction

- Andrei Militaru GSoC-2015, interoperation of ROS and JdeRobot

- Satyaki Chakraborty GSoC-2015, Interconnection with Android Wear

How to increase your chances of being selected in GSoC-2018

If you put yourself in the shoes of a mentor who has to select a student, you’ll immediately realize that some behaviors are usually rewarded. Here are some examples.

- Be proactive: Mentors are more likely to select students who openly discuss the existing ideas and/or propose their own. It is a bad idea to submit your idea only on the Google website without discussing it, because it won’t be noticed.

- Demonstrate your skills: Consider that mentors are being contacted by several students who apply for the same project. One way to show that you are the best candidate is to demonstrate that you are familiar with the software and that you can code. How? Browse the bug tracker (the issues of the JdeRobot project on GitHub), fix some bugs and propose your patch by submitting a pull request, and/or ask mentors to challenge you! Moreover, bug fixes are a great way to get familiar with the code.

- Demonstrate your intention to stay: Students who are likely to disappear after GSoC are less likely to be selected, because there is no point in developing something that won’t be maintained. Moreover, one aim of GSoC is to bring new developers to the community.

Read the relevant information about GSoC in the wiki / web pages before asking. Most FAQs have been answered already!

- Full documentation about GSoC on official website.

- FAQ from GSoC web site.

- If you are new to JdeRobot, take the time to familiarize yourself with it.